NVLink vs. UALink vs. NeuronLink vs. SUE vs. PCIe – How Large is the Astera Labs (ALAB US) Switch Opportunity?

I first introduced Astera Labs, a U.S.-listed company specializing in PCIe retimer and switch chips, in an article last year (Astera Labs (ALAB US) – How Large is the GB200 Content Opportunity?). Investor interest in Astera Labs’ upcoming custom Scorpio-X switch chip has recently surged, so today I will explain the company’s growth potential around this chip.

Astera Labs’ growth drivers here come mainly from two products: the custom NeuronLink switch chip for AWS’s Trainium series, due to launch in the second half of this year, and the custom UALink switch chip for AMD’s MI400 series, expected in the second half of next year. Before diving into these two switch chips, let’s first review the chip interconnect protocols currently on the market: NVIDIA’s NVLink, AWS’s NeuronLink, Broadcom’s SUE (Scale-Up Ethernet), the AMD-led UALink (Ultra Accelerator Link), and PCIe (Peripheral Component Interconnect Express), originally developed by Intel.

First, it is important to understand that UALink uses SerDes (Serializer/Deserializer) with differential signaling: each lane transmits data over two wires carrying mirrored signals, one high and one low. Because the receiver reads the difference between the two wires, noise that couples equally onto both wires cancels out, which gives stronger resistance to interference and allows longer-distance transmission. In contrast, NVLink C2C uses single-ended SerDes, which transmits data over only one wire per lane. The main advantage of single-ended signaling is that it saves significant chip area. For example, NVIDIA’s single-ended signaling lets one lane use just one wire (versus two in a UALink SerDes), saving about 80% of chip area per lane; put the other way, an 80% area saving lets roughly five times as much bandwidth fit within the same chip area. This advantage is widely acknowledged in the industry, but only NVIDIA has successfully implemented and patented it, applying it to NVLink and NVLink Fusion.

Traditionally, single-ended signaling has been limited to around 200–300 Mbps per wire, but NVIDIA has pushed NVLink C2C to 900 GB/s of aggregate chip-to-chip bandwidth by introducing a very clean ground reference (i.e., ground-referenced signaling, or GRS). The biggest drawback of this technology, however, is that it cannot support long-distance transmission because it is susceptible to interference. As a result, NVIDIA adopts GRS single-ended signaling only for communication between chips within the same node (i.e., NVLink C2C), while inter-node chip connections still use differential signaling.
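The noise-immunity and area arguments above can be illustrated with a toy model. The sketch below assumes idealized conditions that are not real electrical parameters: a bit is sent as +V or -V, and interference couples equally onto every wire (pure common-mode noise), with the single-ended receiver comparing against a perfectly clean 0 V reference. The noise values are made up for illustration. It also includes the simple conversion from an area saving per lane to bandwidth density in the same silicon area.

```python
"""Toy model: differential vs. single-ended signaling, plus area arithmetic.

Assumptions (illustrative only):
- a bit is sent as +V (1) or -V (0),
- interference couples equally onto every wire (pure common-mode noise),
- the single-ended receiver compares against an ideal, noise-free 0 V reference.
"""

V = 1.0  # hypothetical signal swing, arbitrary units


def single_ended_tx(bits):
    # One wire per lane: the wire voltage itself carries the bit.
    return [V if b else -V for b in bits]


def differential_tx(bits):
    # Two wires per lane carrying mirrored signals (p = +s, n = -s).
    return [(V, -V) if b else (-V, V) for b in bits]


def single_ended_rx(wire, noise):
    # Receiver compares the noisy wire voltage against the 0 V reference.
    return [1 if v + e > 0 else 0 for v, e in zip(wire, noise)]


def differential_rx(pairs, noise):
    # The same noise lands on both wires; subtracting n from p cancels it.
    return [1 if (p + e) - (n + e) > 0 else 0 for (p, n), e in zip(pairs, noise)]


def bandwidth_density_gain(area_saving):
    # If a lane needs (1 - area_saving) of the original area,
    # the same area fits 1 / (1 - area_saving) times as many lanes.
    return 1.0 / (1.0 - area_saving)


if __name__ == "__main__":
    bits = [1, 0, 1, 1, 0, 0, 1, 0]
    # Hypothetical interference, sometimes larger than the signal swing itself.
    noise = [-1.5, 1.5, 0.2, -2.0, 0.3, 1.2, -0.4, -0.1]

    se = single_ended_rx(single_ended_tx(bits), noise)
    diff = differential_rx(differential_tx(bits), noise)
    print("single-ended bit errors:", sum(a != b for a, b in zip(bits, se)))  # 4
    print("differential bit errors:", sum(a != b for a, b in zip(bits, diff)))  # 0
    print("80%% area saving -> %.1fx bandwidth per area" % bandwidth_density_gain(0.8))
```

Real channels also carry noise that is not common-mode, which is exactly where NVIDIA’s clean ground reference matters; the model only captures why a differential pair tolerates shared interference over longer distances.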

The UALink (Ultra Accelerator Link) protocol comes in two versions, with per-lane speeds of 128 Gbps and 200 Gbps. UALink originated from the PCIe (Peripheral Component Interconnect Express) protocol but incorporated Ethernet architecture to widen the data transmission "highway" at the physical layer, resulting in UALink 1.0, the 200 Gbps version. Though UALink 200G is fast, it lacks flexibility and is suitable only for GPU-to-GPU connections, not for heterogeneous computing environments. A 128 Gbps version was therefore developed: UALink 128G uses the physical layer of PCIe Gen7 but runs UALink at the transport layer, enabling dual-mode operation.
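To put the two per-lane speeds in link terms, here is a minimal arithmetic sketch. It uses only the nominal per-lane rates quoted above, ignores encoding, FEC, and protocol overhead, and assumes a hypothetical x4 link width purely for illustration.

```python
"""Rough per-link bandwidth arithmetic for the two UALink lane speeds.

Assumptions: nominal per-lane rates as quoted in the text, no encoding,
FEC, or protocol overhead, and a hypothetical x4 link width.
"""

LANE_GBPS = {
    "UALink 200G": 200,
    "UALink 128G": 128,  # same per-lane rate as PCIe Gen7
}


def link_gbytes_per_s(lane_gbps, lanes):
    # Aggregate one-direction bandwidth in GB/s: lanes x Gbps / 8 bits per byte.
    return lane_gbps * lanes / 8


if __name__ == "__main__":
    lanes = 4  # hypothetical link width
    for name, rate in LANE_GBPS.items():
        print(f"{name}: x{lanes} link = {link_gbytes_per_s(rate, lanes):.0f} GB/s per direction")
    ratio = LANE_GBPS["UALink 200G"] / LANE_GBPS["UALink 128G"]
    print(f"Per-lane speed ratio: {ratio:.2f}x")
```

Under these assumptions, a 200G lane carries about 1.56x the raw bandwidth of a 128G lane, which is the price UALink 128G pays for its PCIe Gen7 compatibility.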

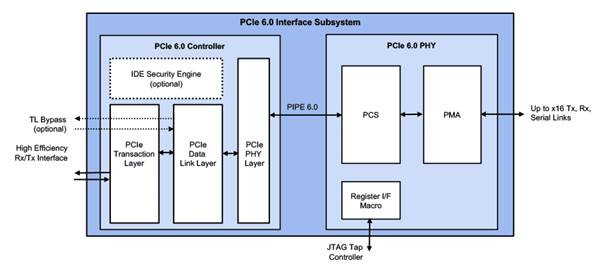

In simple terms, PCIe IP consists of a controller and a PHY: the controller handles protocol processing, while the PHY handles physical signal transmission (see the diagram above). The controller has several internal layers, but the key point is this: because UALink 128G shares the same front-end PHY as PCIe Gen7 (both run at 128 Gbps per lane), the PHY can be connected to either of two controllers at the back end. In other words, the link can switch to the PCIe Gen7 controller or to the UALink controller as needed, which is what dual-mode operation means.
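The controller/PHY split described above can be sketched structurally. This is a minimal model, not a real IP interface: the class names, mode keys, and methods are all hypothetical, and real PCIe or UALink IP exposes nothing like this Python API. It only shows the idea that the 128 Gbps PHY stays fixed while the protocol controller behind it is swapped.

```python
"""Sketch of dual-mode operation: one shared PHY, two back-end controllers.

All class, method, and mode names are hypothetical illustrations.
"""

from dataclasses import dataclass, field


@dataclass
class Controller:
    protocol: str

    def process(self, payload: bytes) -> str:
        # Stand-in for protocol processing in the controller's upper layers.
        return f"{self.protocol} handled {len(payload)} bytes"


@dataclass
class SharedPhy:
    lane_gbps: int = 128  # PCIe Gen7 and UALink 128G share this lane rate
    controllers: dict = field(default_factory=lambda: {
        "pcie7": Controller("PCIe Gen7"),
        "ualink128": Controller("UALink 128G"),
    })
    mode: str = "pcie7"

    def select_mode(self, mode: str) -> None:
        # Dual-mode: route the same physical lanes to a different controller.
        if mode not in self.controllers:
            raise ValueError(f"unsupported mode: {mode}")
        self.mode = mode

    def receive(self, payload: bytes) -> str:
        # The PHY only moves bits; the active controller interprets them.
        return self.controllers[self.mode].process(payload)


if __name__ == "__main__":
    phy = SharedPhy()
    print(phy.receive(b"abcd"))  # PCIe Gen7 handled 4 bytes
    phy.select_mode("ualink128")
    print(phy.receive(b"abcd"))  # UALink 128G handled 4 bytes
```

The design choice the sketch highlights is that nothing below the controller changes when the mode flips, which is why a single 128 Gbps PHY can serve both protocols.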

The key difference between UALink 128G and UALink 200G lies in their application. UALink 200G resembles Broadcom’s SUE or NVIDIA’s NVLink: it is geared toward GPU-to-GPU interconnection and does not support heterogeneous devices, making it better suited to model training. UALink 128G, in contrast, is closer to a PCIe-based scale-up approach. Although its per-lane speed is lower, it supports overclocking, allows mixed connections among CPUs, storage pools, and other computing devices, and is compatible with PCIe Gen7 through dual-mode operation, making it better suited to model inference.
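The distinction above can be expressed as a simple selection rule. This is a minimal sketch that encodes only the simplified rule stated in the text (200G for accelerator-only pools, 128G when CPUs, storage pools, or PCIe Gen7 devices join); the device category labels are hypothetical, and real deployments weigh many more factors.

```python
"""Pick a UALink flavor from the endpoint mix, per the simplified rule above.

Assumption: UALink 200G links accelerators only, while UALink 128G also
admits CPUs, storage pools, and PCIe Gen7 devices. Labels are hypothetical.
"""

GPU_ONLY = {"gpu"}
HETEROGENEOUS = {"gpu", "cpu", "storage", "pcie7_device"}


def pick_ualink_flavor(endpoints):
    kinds = set(endpoints)
    if kinds <= GPU_ONLY:
        # Homogeneous accelerator pool: faster 200G lanes (training-style).
        return "UALink 200G"
    if kinds <= HETEROGENEOUS:
        # Mixed devices need 128G's PCIe Gen7-compatible PHY (inference-style).
        return "UALink 128G"
    raise ValueError(f"unsupported endpoint types: {sorted(kinds - HETEROGENEOUS)}")


if __name__ == "__main__":
    print(pick_ualink_flavor(["gpu"] * 8))                 # UALink 200G
    print(pick_ualink_flavor(["gpu", "cpu", "storage"]))   # UALink 128G
```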

Broadcom’s SUE (Scale-Up Ethernet) is yet another interconnect protocol. Despite its name, SUE uses only Ethernet’s physical layer; its upper layers are completely different from traditional Ethernet protocols, stripped down to just two and a half layers with no TCP/IP, UDP, or other packet-processing or flow-control mechanisms. SUE is point-to-point and borrows NVLink’s transmission logic while leveraging Ethernet’s speed, achieving more efficient data transmission. It is similar to connecting two laptops directly with a cable and running a thin Ethernet layer between them. However, this approach has limitations, such as weaker support for heterogeneous expansion than UALink.
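One reason a stripped-down stack is more efficient shows up in simple byte counting. The sketch below compares payload efficiency per frame, assuming standard Ethernet wire framing is kept around every stack; the Ethernet, IPv4, TCP, and UDP sizes are the standard minimum header sizes, but the thin scale-up header size is a hypothetical placeholder, not Broadcom’s actual SUE format. It ignores the latency and compute cost of TCP/IP processing, which matters at least as much.

```python
"""Payload efficiency per frame for full vs. stripped-down stacks.

Standard minimum sizes (bytes): preamble+SFD 8, MAC header 14, FCS 4,
inter-frame gap 12, IPv4 20, TCP 20, UDP 8. THIN_HEADER is hypothetical,
not Broadcom's actual SUE format.
"""

ETH_WIRE_OVERHEAD = 8 + 14 + 4 + 12  # preamble/SFD + MAC header + FCS + IFG
IPV4, TCP, UDP = 20, 20, 8
THIN_HEADER = 8  # hypothetical thin scale-up header

STACKS = {
    "Ethernet + IPv4 + TCP": ETH_WIRE_OVERHEAD + IPV4 + TCP,
    "Ethernet + IPv4 + UDP": ETH_WIRE_OVERHEAD + IPV4 + UDP,
    "Ethernet + thin scale-up header": ETH_WIRE_OVERHEAD + THIN_HEADER,
}


def efficiency(payload_bytes, overhead_bytes):
    # Fraction of transmitted bytes that are useful payload.
    return payload_bytes / (payload_bytes + overhead_bytes)


if __name__ == "__main__":
    payload = 256  # hypothetical transfer size per frame
    for name, overhead in STACKS.items():
        print(f"{name}: {overhead} B overhead, {efficiency(payload, overhead):.1%} efficient")
```

The gap widens as transfers get smaller, which is typical of the fine-grained memory traffic in scale-up GPU networks.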

AMD’s upcoming Helios AI rack and MI400 series GPUs will adopt the new UALink 200G protocol. AMD has formed a team to develop a switch chip that pairs with two MI400 GPUs and handles first-level switching within the Helios rack’s compute tray. This chip connects to a larger rack-level switch chip (the UALink switch), akin to NVIDIA’s NVSwitch, which manages data transmission beyond a single compute tray. Designing compute tray switches is relatively easy; developing rack-level switch chips is more challenging. AMD is currently working with Astera Labs to develop this UALink scale-up switch chip for the switch trays, with tape-out expected in Q1 2026 and mass production in Q4 2026.
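The two-tier switching hierarchy described above can be sketched as a routing function. From the text: one tray-level switch pairs with two MI400 GPUs, and tray switches connect to the rack-level UALink switch. The tray count, GPU numbering, and the single logical rack switch (real switch trays hold multiple switch chips) are hypothetical simplifications for illustration.

```python
"""Two-tier scale-up path in the Helios-style layout described in the text.

Assumptions: NUM_TRAYS, consecutive GPU numbering, and a single logical
rack-level switch are hypothetical simplifications.
"""

GPUS_PER_TRAY = 2  # per the text: one tray switch pairs with two MI400s
NUM_TRAYS = 4      # hypothetical, for illustration only


def tray_of(gpu_id):
    # GPUs are numbered consecutively, two per compute tray.
    return gpu_id // GPUS_PER_TRAY


def path(src, dst):
    for g in (src, dst):
        if not 0 <= g < GPUS_PER_TRAY * NUM_TRAYS:
            raise ValueError(f"unknown GPU {g}")
    s, d = tray_of(src), tray_of(dst)
    if s == d:
        # Same tray: traffic stays on the tray switch (first-level switching).
        return [f"gpu{src}", f"tray_switch{s}", f"gpu{dst}"]
    # Different trays: traffic climbs to the rack-level UALink switch.
    return [f"gpu{src}", f"tray_switch{s}", "rack_ualink_switch",
            f"tray_switch{d}", f"gpu{dst}"]


if __name__ == "__main__":
    print(" -> ".join(path(0, 1)))  # gpu0 -> tray_switch0 -> gpu1
    print(" -> ".join(path(0, 5)))  # crosses the rack-level switch
```

Every cross-tray transfer depends on the rack-level switch, which is why that chip, rather than the tray switch, is the harder and more valuable design.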

AWS has its own interconnect protocol called NeuronLink, which is essentially a modified version of PCIe for improved performance and support for overclocking. Currently, NeuronLink3 (used in Trainium 2/2.5) corresponds to PCIe5, and NeuronLink4 (used in next-gen Trainium 3) corresponds to PCIe7. Astera Labs has custom-built the Scorpio-X switch chip for AWS’s Trainium rack scale-up network. This Scorpio-X switch is software-programmable and can be configured as a NeuronLink switch to meet AWS's high-performance transmission requirements. Astera Labs plans to first launch a PCIe6-based scale-up switch in the second half of this year for AWS’s Trainium 2.5 Teton PDS rack. Meanwhile, Astera Labs will also tape out a dual-mode PCIe7/UALink 128G switch chip in Q3 2025, planned for mass production in Q2 2026 to pair with AWS’s Trainium 3 Teton Max rack.
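The NeuronLink-to-PCIe mapping above can be tabulated alongside the PCIe base per-lane rates (PCIe 5.0: 32 GT/s, 6.0: 64 GT/s, 7.0: 128 GT/s). This is a sketch under stated assumptions: NeuronLink is AWS-proprietary and, as noted, modified to support overclocking, so its actual lane rates may differ from the PCIe base rates; the x16 width is a hypothetical example and encoding/FLIT overhead is ignored.

```python
"""NeuronLink generations mapped to their PCIe base, per the text.

PCIe base rates are standard; NeuronLink's actual (overclocked) rates may
differ. The x16 width is hypothetical; encoding/FLIT overhead is ignored.
"""

PCIE_GT_PER_LANE = {5: 32, 6: 64, 7: 128}  # 6 = base of the PCIe6 Scorpio-X switch

NEURONLINK = {
    "NeuronLink3": {"pcie_gen": 5, "trainium": "Trainium 2/2.5"},
    "NeuronLink4": {"pcie_gen": 7, "trainium": "Trainium 3"},
}


def raw_gbytes_per_s(pcie_gen, lanes=16):
    # Raw one-direction rate: GT/s x lanes / 8 bits per byte.
    return PCIE_GT_PER_LANE[pcie_gen] * lanes / 8


if __name__ == "__main__":
    for name, info in NEURONLINK.items():
        gen = info["pcie_gen"]
        print(f"{name} ({info['trainium']}): PCIe Gen{gen} base, "
              f"{PCIE_GT_PER_LANE[gen]} GT/s per lane, "
              f"~{raw_gbytes_per_s(gen):.0f} GB/s raw per x16 direction")
```

Each PCIe generation doubles the per-lane rate, so the jump from a PCIe5-class NeuronLink3 to a PCIe7-class NeuronLink4 is a 4x increase in raw per-lane bandwidth.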

In the following paragraphs, I will provide a detailed estimate of the dollar content Astera Labs can generate from the AWS Trainium rack and the AMD Helios rack.